Dialogue Systems

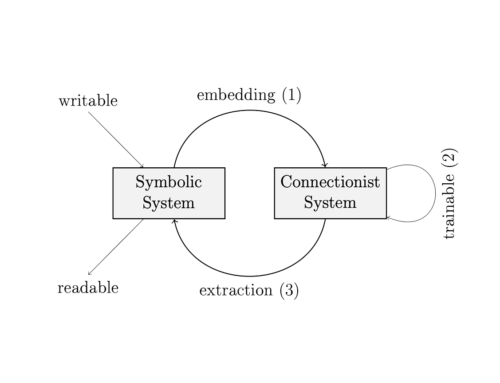

Dialogue systems can conduct natural conversations spanning multiple turns. While this area has been a yardstick for Artificial Intelligence at least since the Turing Test was devised in 1950, popular interest has increased notably in recent years due to the rise of platforms and speech assistants such as IBM Watson, Google Assistant, Apple Siri and Microsoft Cortana. In my work, I am primarily interested in building systems that combine modern deep learning approaches (e.g. Transformer architectures) with background knowledge. I believe that the capacity to conduct natural, human-like conversations (as often exhibited by generative deep learning systems) should be combined with the ability to query and structure background knowledge. This makes it possible to build dialogue systems that are not only impressive showcases but are also informative and can be tailored to particular use cases.

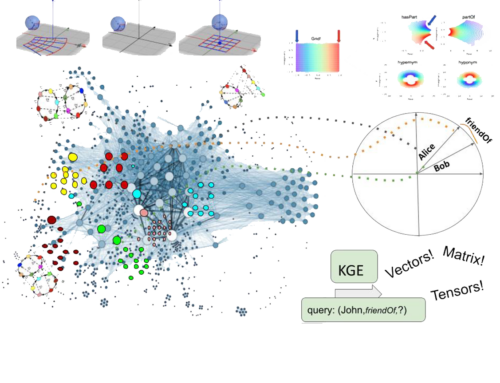

To achieve this vision, we have contributed several pieces of research to the literature over the past few years. In work published at CoNLL 2018, we created a dialogue system able to use background knowledge in a so-called response selection setting, i.e. picking the correct response from a (large) set of pre-defined responses; at the time, it achieved state-of-the-art performance on the Ubuntu Dialogue Corpus benchmark. In 2019, we extended this work to generative dialogue systems (ESWC 2019 paper, ISWC 2019 paper) that use mechanisms such as gating to decide when to draw on information from an underlying knowledge graph instead of the standard output vocabulary. For example, given the utterance “Do you know the home ground of Arsenal and its capacity?”, such a system could reply “Arsenal’s home ground is the *Emirates Stadium* with a capacity of *60 704*.”, where the parts in italics are retrieved from the knowledge graph. This makes it possible to incorporate domain-specific and changing knowledge into a generative system. This is useful because the stadium name and capacity can change over time and, more generally, organisations running a dialogue system usually want to be able to update their domain-specific expert knowledge. We subsequently extended these dialogue systems into KGIRNet, which implements a knowledge-graph-aware decoding procedure for Transformer architectures.
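The gating idea can be illustrated with a minimal, pure-Python sketch in the style of copy/pointer-generator networks. It is not the code of the ESWC 2019 or ISWC 2019 systems: the function names, the scalar gate logit and all scores are hypothetical stand-ins for values a trained decoder would produce at a single decoding step. The gate decides how much probability mass goes to knowledge graph entities versus ordinary vocabulary words.

```python
import math
from typing import Dict, Tuple


def softmax(scores: Dict[str, float]) -> Dict[str, float]:
    """Numerically stable softmax over a token -> score mapping."""
    m = max(scores.values())
    exps = {k: math.exp(v - m) for k, v in scores.items()}
    total = sum(exps.values())
    return {k: v / total for k, v in exps.items()}


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def gated_next_token(
    vocab_scores: Dict[str, float],
    kg_scores: Dict[str, float],
    gate_logit: float,
) -> Tuple[str, Dict[str, float]]:
    """Mix vocabulary and KG-entity distributions for one decoding step.

    gate = sigmoid(gate_logit) is the probability of copying a KG entity;
    1 - gate is the probability of generating from the output vocabulary.
    Returns the most likely token and the full mixed distribution.
    """
    gate = sigmoid(gate_logit)
    mixed: Dict[str, float] = {}
    for tok, p in softmax(vocab_scores).items():
        mixed[tok] = mixed.get(tok, 0.0) + (1.0 - gate) * p
    for ent, p in softmax(kg_scores).items():
        mixed[ent] = mixed.get(ent, 0.0) + gate * p
    best = max(mixed, key=mixed.get)
    return best, mixed


# Hypothetical scores at the slot where the stadium name should appear.
vocab = {"the": 1.0, "stadium": 0.8, "is": 0.3}
kg_entities = {"Emirates Stadium": 2.0, "60 704": 0.5}
print(gated_next_token(vocab, kg_entities, gate_logit=2.0)[0])   # Emirates Stadium
print(gated_next_token(vocab, kg_entities, gate_logit=-3.0)[0])  # the
```

Because the entity strings come from the knowledge graph rather than a fixed output vocabulary, this kind of design is one way updated facts in the graph can reach the generated reply. In a trained model, the gate logit would itself be predicted from the decoder state rather than passed in by hand.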

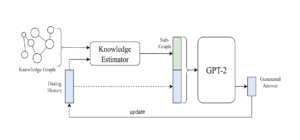

In a separate line of work, we investigated how knowledge graphs can be efficiently combined with large-scale language models such as GPT-2 and GPT-3. As shown in the figure above, one natural idea is to include relevant parts of the knowledge graph in the context, alongside the previous dialogue history. The Graph Attention Transformer achieves this via a space-efficient context encoding mechanism that reduces the required space by a factor of up to 3.6. User studies show that the resulting dialogues are more informative and interesting.
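To make the naive context-inclusion idea concrete, here is a hedged pure-Python sketch that linearizes (subject, relation, object) triples and prepends them to the dialogue history under a word budget. It does not implement the Graph Attention Transformer or its space-efficient encoding; the marker tokens `<kg>`, `<s>`, `<r>`, `<o>` and `<turn>`, the whitespace word count and the budget are illustrative assumptions standing in for a real tokenizer and context limit. It shows why space matters: every linearized triple consumes context that would otherwise hold dialogue history.

```python
from typing import List, Tuple

# A knowledge-graph fact as (subject, relation, object).
Triple = Tuple[str, str, str]


def linearize_triples(triples: List[Triple]) -> str:
    """Flatten triples into text using hypothetical marker tokens <s>, <r>, <o>."""
    return " ".join(f"<s> {s} <r> {r} <o> {o}" for s, r, o in triples)


def build_context(history: List[str], triples: List[Triple], max_words: int) -> str:
    """Prepend linearized KG facts to the dialogue history.

    Oldest turns are dropped first until the whitespace-separated word count
    fits max_words. The KG part is always kept; if it alone exceeds the
    budget, the history ends up empty.
    """
    kg_text = "<kg> " + linearize_triples(triples)
    turns = list(history)

    def render(ts: List[str]) -> str:
        return " ".join([kg_text] + [f"<turn> {t}" for t in ts])

    while turns and len(render(turns).split()) > max_words:
        turns.pop(0)  # drop the oldest turn first
    return render(turns)


history = ["Hi!", "Hello, how can I help?", "Do you know the home ground of Arsenal?"]
facts = [("Arsenal", "home_ground", "Emirates_Stadium")]
print(build_context(history, facts, max_words=16))
# <kg> <s> Arsenal <r> home_ground <o> Emirates_Stadium <turn> Do you know the home ground of Arsenal?
```

With a tight budget, older turns are dropped to make room for the facts; a more compact encoding of the graph would leave more room for the dialogue history.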

I am a steering board member and co-leader of the future research track of the Fraunhofer speech assistant platform, where we aim to put these ideas into practice. The platform covers the full pipeline of a speech assistant and supports both simple and more complex use cases. In a talk in 2019, I outlined our ideas.

Related Publications

Inproceedings

RoMe: A Robust Metric for Evaluating Natural Language Generation

In: Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), ACL 2022, Dublin, Ireland, May 22-27, 2022, pp. 5645–5657, Association for Computational Linguistics, 2022.

DialoKG: Knowledge-Structure Aware Task-Oriented Dialogue Generation

In: Findings of the Association for Computational Linguistics: NAACL 2022, Seattle, WA, United States, July 10-15, 2022, pp. 2557–2571, Association for Computational Linguistics, 2022.

Grounding Dialogue Systems via Knowledge Graph Aware Decoding with Pre-trained Transformers

In: The Semantic Web - 18th International Conference, ESWC 2021, Virtual Event, June 6-10, 2021, Proceedings, pp. 323–339, Springer, 2021.

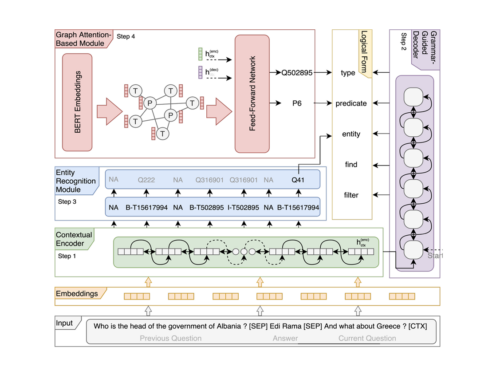

Context Transformer with Stacked Pointer Networks for Conversational Question Answering over Knowledge Graphs

In: The Semantic Web - 18th International Conference, ESWC 2021, Virtual Event, June 6-10, 2021, Proceedings, pp. 356–371, Springer, 2021.

Conversational Question Answering over Knowledge Graphs with Transformer and Graph Attention Networks

In: Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics: Main Volume, EACL 2021, Online, April 19-23, 2021, pp. 850–862, Association for Computational Linguistics, 2021.

Space Efficient Context Encoding for Non-Task-Oriented Dialogue Generation with Graph Attention Transformer

In: Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing, ACL/IJCNLP 2021, (Volume 1: Long Papers), Virtual Event, August 1-6, 2021, pp. 7028–7041, Association for Computational Linguistics, 2021.

Proxy Indicators for the Quality of Open-domain Dialogues

In: Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, EMNLP 2021, Virtual Event / Punta Cana, Dominican Republic, November 7-11, 2021, pp. 7834–7855, Association for Computational Linguistics, 2021.

Language Model Transformers as Evaluators for Open-domain Dialogues

In: Proceedings of the 28th International Conference on Computational Linguistics, COLING 2020, Barcelona, Spain (Online), December 8-13, 2020, pp. 6797–6808, International Committee on Computational Linguistics, 2020.

Treating Dialogue Quality Evaluation as an Anomaly Detection Problem

In: Proceedings of The 12th Language Resources and Evaluation Conference, LREC 2020, Marseille, France, May 11-16, 2020, pp. 508–512, European Language Resources Association, 2020.

Using a KG-Copy Network for Non-goal Oriented Dialogues

In: The Semantic Web - ISWC 2019 - 18th International Semantic Web Conference, Auckland, New Zealand, October 26-30, 2019, Proceedings, Part I, pp. 93–109, Springer, 2019.

Incorporating Joint Embeddings into Goal-Oriented Dialogues with Multi-task Learning

In: The Semantic Web - 16th International Conference, ESWC 2019, Portorož, Slovenia, June 2-6, 2019, Proceedings, pp. 225–239, Springer, 2019.

Improving Response Selection in Multi-Turn Dialogue Systems by Incorporating Domain Knowledge

In: Proceedings of the 22nd Conference on Computational Natural Language Learning, CoNLL 2018, Brussels, Belgium, October 31 - November 1, 2018, pp. 497–507, Association for Computational Linguistics, 2018.