The Next 66 Years of Artificial Intelligence

[Disclosure: The post below reflects a personal opinion and is not linked in any way to my employer. Sources for images and quotes are at the end of the article.]

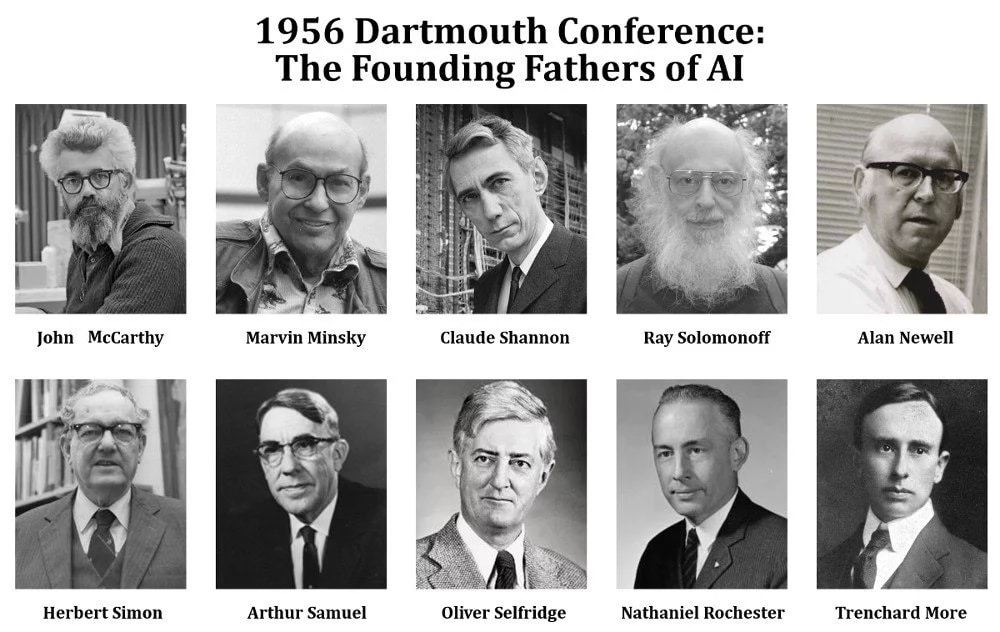

In 1956, a workshop took place at Dartmouth College (New Hampshire, USA) which is now widely considered to be the birth of Artificial Intelligence as a research field. Even though AI-based programs were written in the years prior to the workshop and Turing’s landmark paper on “Computing Machinery and Intelligence” already appeared in 1950, the workshop is credited with actually introducing the term “Artificial Intelligence”. The now-famous workshop proposal stated:

We propose that a 2-month, 10-man study of artificial intelligence be carried out during the summer of 1956 at Dartmouth College in Hanover, New Hampshire. The study is to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it. An attempt will be made to find how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves. We think that a significant advance can be made in one or more of these problems if a carefully selected group of scientists work on it together for a summer. [source: proposal by McCarthy, J., Minsky, M., Rochester, N., Shannon, C.E.]

Fast forward 66 years and we now know that it takes more than a summer to make significant progress in AI. Exactly how much AI has progressed since its inception is disputed. On the one side of the spectrum, there is a perception that general purpose AI is just around the corner if we can scale up existing technology. On the other side of the spectrum, the perception is that little substantial progress on fundamental issues has been made in the past decades and several paradigm shifts are needed to achieve general purpose AI. In fact, several topics discussed 66 years ago in the Dartmouth workshop are still active areas of research such as language understanding, neural networks and creative intelligence. Undeniably, AI had remarkable achievements in this time span, such as achieving superhuman performance in a wide range of games, revolutionising natural language processing and computer vision as well as recently shifting more towards important practical problems such as protein structure prediction. Given the whirlwind of announcements related to AI and the sheer insurmountable amount of papers published in the field, it seems that it is almost unpredictable how AI will develop over the next few years, let alone decades. There is an almost universal fear among AI researchers in academia that you need to be quick in whatever you work to stay ahead of the innovation curve. Understandably, researchers and practitioners in the field can feel overwhelmed and it is easy to fall back into a myopic view of the field in which only the previous and next few years are considered. This is one of the reasons why I decided to create this blog post giving some thoughts to the more long-term implications of AI and why they matter for us now.

If we could fast forward another 66 years to the year 2088, which state would AI be in? What effect will it have on humanity? I can see three (not disjoint) main scenarios for advanced AI systems that I am describing below for which I believe it is worth being conscious about them even now. Naturally, this assumes that by 2088 climate change as well as political or economical crises haven’t affected our planet(s) to the point that (artificial) life on Earth (or other nearby planets) has become impossible.

Scenario 1: AI takeover

This is a posthumanist scenario, elaborated in detail by Nick Bostrom, Ray Kurzweil and others. The assumption here is that AI eventually achieves and surpasses the conjecture of the Dartmouth workshop quoted above. For AI systems to replace humans, not only would AI methods need to outperform human intelligence across a wide range of tasks, but humans would also need to – deliberately or accidentally – let AI systems replace them. This is a real risk and was recognized early on by experts in the field:

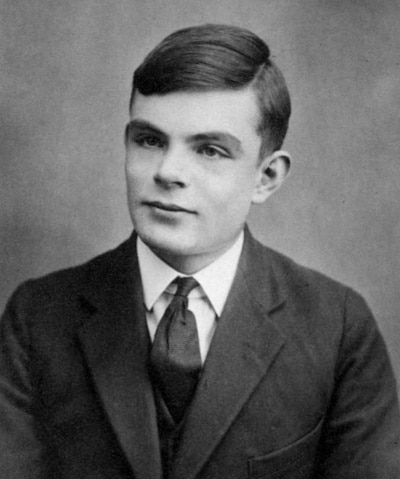

Alan Turing in a 1951 lecture in Manchester:

“It seems probable that once the machine thinking method had started, it would not take long to outstrip our feeble powers. … At some stage, therefore, we should have to expect the machines to take control”

Marvin Minsky in 1984:

“The problem is that, with such powerful machines, it would require but the slightest accident of careless design for them to place their goals ahead of ours”

Opposed to this, some scholars like David Lesley are not convinced that we will ever see the arrival of a second intelligent species. I have personally not seen any strong argument supporting this view. While strong AI will likely need more than just scaling of existing methods to more data and compute power, recent progress in language modelling, robotics and computer vision⎻even if by itself probably insufficient⎻indicates that strong AI is less than 66 years away. Strong AI alone does not automatically mean a post-humanist scenario as I explain in the third scenario below and could, on the contrary, improve the quality of life and well-being of humans significantly.

If this scenario were to happen, is there some light in the gloomingly dark perspective of a post-humanist scenario? Even though an end to the human race seems to be one of the most catastrophic outlooks we can think of, some post-humanists argue (or at least hope) that being replaced by a superior intelligence opens the path towards a better future. In this future, several failures of humanity may not be repeated by superior AI systems. For example, artificial intelligence might have a better chance to coordinate globally to protect the climate and save the environment. Higher intelligence might also lead to less war and political crises, because there might be better mechanisms for machines to arrive at solutions not needing worst-case escalations and suffering. However, there is a fundamental problem with this view: We are currently very far from being able to understand or control the behaviour of AI systems. The behaviour of artificial intelligence is quite different to the behaviour of “natural” intelligence. Without understanding it fully, handing over too much control to AI systems without proper guardrails can lead to catastrophic failures. It is important to realise that this is not a problem which only becomes relevant in several decades from now, but is already influencing us today. For example, AI algorithms screening resumes or deciding about bail and parole have been shown to treat Black and White people differently. Some mortgage applications and medical tests are screened by machine learning systems to improve efficiency⎻but the algorithms can often not explain their judgement and it could be based on spurious correlations or simply outdated information. Autonomous vehicles might be prone to adversarial attacks on road signs leading to accidents. So while AI has a huge potential for positive change, the lesson already learned over the past decade is that the more control we give to an AI system the better we need to understand it. While it might not be that important to understand the prediction of an AI method for the outcome of a soccer game, letting AI systems assign credit scores to persons or automating legal judgement critically requires a high level of understanding.

Scenario 2: Physical integration of AI & humans

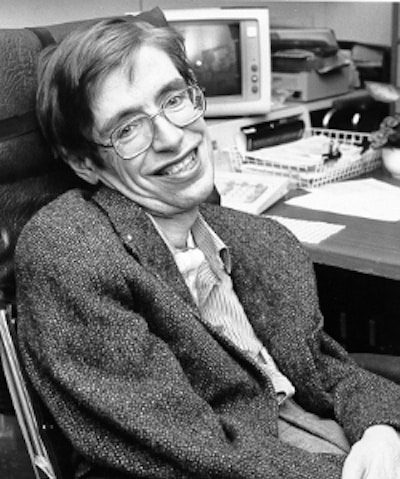

A somewhat milder scenario than the above outlined post-humanist scenario by AI takeover is the so called transhumanist view: Humans won’t be replaced by AI but some parts of us become biomechatronic. This vision is heavily exploited in TV shows, but is now becoming a more realistic perspective. Neuralink is, for example, aiming to build a generalised brain device to solve several problems in human brains (such as addiction or anxiety) by implanting chips in the brain. Such efforts may ultimately lead to a much higher bandwidth channel between humans and AI systems. This could, in principle, solve many limitations of our human bodies. Improvements to humans can be used to both treat diseases by AI systems taking over critical functionality, but can also be used to enhance already healthy people. Physicist Stephen Hawking argued (see quote below) that at least a fraction of humankind would not be able to resist such improvements of human characteristics. This can lead to “super humans” who improve at a rapid rate.

While there is no doubt that this opens up opportunities to improve our lives, it also poses risks: For example, the most natural channel for us humans to communicate (to both other humans and machines) is voice, language and gestures. Using thoughts or other brain stimuli to control AI systems is something that is not very natural if it concerns any system that we do not consider to be part of our own body. This means that, similar to the transhumanist scenario outlined above, any control of an AI system that influences aspects outside of (the organic part of) our own body needs to be extremely well understood before being applied in practice.

This is particularly critical for all systems in which the communication between human organic and biomechatronic body parts is bidirectional, e.g. AI systems being able to directly fire neural signals that make human organic parts move and act as desired by the system. This can be further emphasised if neurally enhanced humans can communicate with each other. This type of communication is likely to be significantly faster and more efficient than voice. It could lead to humanity becoming a single organism connecting a large number of human brains and AI systems. Such systems could take global actions. While this could, in principle, be used to better combat global problems, any flaw might have disastrous consequences for human life and it would be unwise to use those techniques without understanding them in depth.

Stephen Hawking in 2006:

“[…] some people won’t be able to resist the temptation to improve human characteristics, such as size of memory, resistance to disease, and length of life. Once such super humans appear, there are going to be major political problems, with the unimproved humans, who won’t be able to compete. Presumably, they will die out, or become unimportant. Instead, there will be a race of self-designing beings, who are improving themselves at an ever-increasing rate.”

Elon Musk, interviewed in 2017:

“You’re already digitally superhuman. The thing that would change is the interface—having a high-bandwidth interface to your digital enhancements. The thing is that today, the interface all necks down to this tiny straw, which is, particularly in terms of output, it’s like poking things with your meat sticks, or using words—either speaking or tapping things with fingers. And in fact, output has gone backwards. It used to be, in your most frequent form, output would be ten-finger typing. Now, it’s like, two-thumb typing. That’s crazy slow communication. We should be able to improve that by many orders of magnitude with a direct neural interface.”

Scenario 3: Humans (indirectly) controlling AI

In this line of thought, which could be labelled as the co-humanist scenario, AI systems are explicitly aiming at following human preferences. This does not merely mean that a human has configured an AI system with what he believes is preferable behaviour, but rather means that an AI learns those human preferences itself. This is an important difference: Bostrom gives an example of this in his book on superintelligence: An AI system might have the task to solve Riemann’s Hypothesis – a famous and yet unproven mathematical hypothesis. To increase the probability of solving the hypothesis, the AI might decide to transform Earth into a single large supercomputer to the detriment of all humans. It will also proactively preempt any attempts to be shut down. This means that as a side effect of trying to solve its goal, the AI system may destroy humanity since humans consume the resources it needs. The example by Bostrom is spectacular but shows a more general pattern: The standard approach to AI is to optimise an objective function and it is often not possible to rule out any negative side effects this might have.

Ruling out any negative side-effects is known as the AI control or AI alignment problem and solving it turns out to be incredibly hard to solve. Some philosophers like Richard David Precht in his recent book on AI and the meaning of Life play down the idea of a superintelligent system being prone to making such simple “errors” – after all, the system is highly intelligent and would act accordingly. However, this is a misconception: AI systems cannot be expected to be human-like and (by default) there is no mechanism for them to detect that they misbehave and may, in fact, unintentionally obliterate humanity.

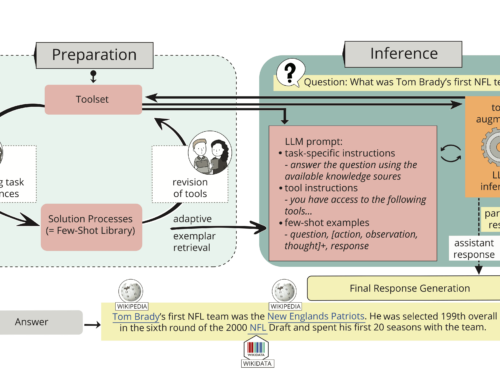

The task-solving performance of an AI system (which is a common view of what it means to be intelligent) is usually decoupled from its alignment with human values. Several researchers have taken this as a starting point for developing AI systems that avoid this decoupling and explicitly aim to align themselves with human values. Stuart Russel in his book “Human Compatible” proposes an interesting approach that has no rigid objective function but continuously learns to match human preferences by observing human behaviour. Deepmind recently introduced Sparrow – a dialogue agent that aims to follow a set of human values. Generally, coupling human alignment with the performance of an AI system should, from my point of view, not be an afterthought when developing a (powerful) AI system, but a primary design consideration. Given the uncertainty about whether and when we see a technological singularity, this is a problem that already needs to be considered in the systems we are building today. While many researchers (like me) are curious about the boundaries of what AI can achieve, this curiosity doesn’t relieve us from our responsibility to think about the potential negative effects of the technology we are developing. Keeping this in mind allows us to develop safer systems, which – at the very least – allow us to stay in this third scenario for long enough to properly understand the consequences of potentially moving to a trans- or post-humanist scenario.

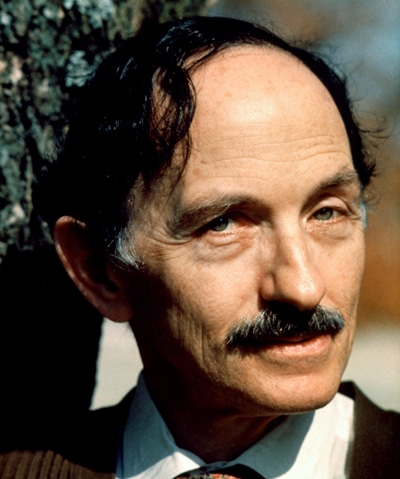

Irving John Good in 1965:

“an ultraintelligent machine could design even better machines; there would then unquestionably be an “intelligence explosion,” and the intelligence of man would be left far behind. Thus the first ultraintelligent machine is the last invention that man need ever make, provided that the machine is docile enough to tell us how to keep it under control.”

Stuart Russel in 2019:

“the standard model—whereby humans attempt to imbue machines with their own purposes—is destined to fail. We might call this the King Midas problem: Midas, a legendary king in ancient Greek mythology, got exactly what he asked for—namely, that everything he touched should turn to gold. Too late, he discovered that this included his food, his drink, and his family members, and he died in misery and starvation.”

Summary: In the last 66 years, in which Artificial Intelligence exists as a research field, tremendous success has been achieved in various applications. Even though making an accurate prediction is impossible, there is a reasonably high chance that we will have superintelligent AI systems within the next 66 years. Such AI systems might replace humans (posthumanism), integrate with humans (transhumanism) or follow human preferences (co-humanism). As argued above, I believe the latter case is preferable – at least as long as we do not have a strong fundamental understanding of what an AI system outside of human control would do. It is not unlikely that we obtain this level of understanding only after superintelligent AI systems emerge. Given the uncertainty on when and whether superintelligent systems emerge, it is critically important to align major AI systems with human values already today. Much like disciplines like transfer learning have moved to the AI mainstream in the past years, it is therefore required that AI safety also becomes a primary consideration if we want AI to have a positive impact 66 years from now.

Disclaimer: Please note that this blog post can by no means replace reading in more depth about the long-term perspective of AI research (see links below), but is rather meant as a call to AI researchers and practitioners to take AI safety issues seriously already now.

Interesting books if you want to dive deeper:

- “Singulatory is Near” by Ray Kurzweil

- “Superintelligence” by Nick Bostrom

- “Human Compatible” by Stuart Russel (recommended for its approach towards controlling AI)

- “Künstliche Intelligenz und der Sinn des Lebens” by Richard David Precht

- “Rebooting AI” by Gary Marcus and Ernest Davis (recommended for an easy access to the topic)

- “The Alignment Problem” by Brian Christian (recommended for breadth, depth as well as real-world examples)

Sources for quotes and images:

- Title image: sienceabc in the article “What is Artificial Intelligence?“

- Alan Turing quote source: “Artificial Intelligence: A Modern Approach, 4th edition” and image source: Wikimedia Commons

- Marvin Minsky quote source: Afterword to Vernor Vinge’s novel, “True Names”, web version and image source: Wikimedia Commons

- Stephen Hawking quote source: lecture on life in the universe and image source: Wikimedia Commons

- Elon Musk quote source: “What but why?” post on Neuralink and the Brain’s Magical Future and image source: Wikimedia Commons

- Irving John Goog quote source: 1965, Advances in Computers, Volume 6, Speculations Concerning the First Ultraintelligent Machine and image source: Virginia Tech Imagebase

- Stuart Russel quote source: Russel’s book “Human Compatible” and image source: Wikimedia Commons

Thanks to Steve Moyle and Axel Ngonga for proof reading the article before publication.

One Comment

Comments are closed.

I think the most important problem is ignored: misuse of AI for selfish reasons with catastrophic impacts on society.

Consider the case of Russia attacking Ukraine being a stochastic game where Putin thought he would optimize his benefits – to the detriment of Ukrainian *and* Russian citizens – and therefore chose to attack. An AI could make a similar detrimental decision only faster expecting beneficial outcome for its master and being completely aligned with the objectives of its master. Asimovs 3 laws (plus his 4th zeroth law) were supposed to contain such situation, but if there is a master that does not want such rules to uphold, who would be able to guard these rules?

Hence, I have a hard time to imagine that a superintelligence could contain political crisis. Indeed, I believe we do have enough intelligence to know how to do things, but not sufficient political will for said selfish reasons. To put it otherwise, politics having to deal with opposing objectives will not go away by no amount of intelligence.

Side note: the example attributed to Bostrom was already conceived by Asimov around 1950 in his star diaries, albeit with only slightly different objectives given to the AI.